I do research to save the lives of our children (you can check the numbers).

I wish to make driverless vehicles a reality sometime between 2030 and 2050.

Publications free the ideas!

2016

The Cityscapes Dataset

M. Cordts, M. Omran, S. Ramos, T. Scharwächter, M. Enzweiler, R. Benenson, U. Franke, S. Roth, B. Schiele

Presented at a CVPR workshop (unpublished)

Chef's recommendation

@inproceedings{Cordts2015Cvprw,

title={The Cityscapes Dataset},

author={Cordts, Marius and Omran, Mohamed and Ramos, Sebastian and Scharw{\"a}chter, Timo and Enzweiler, Markus and Benenson, Rodrigo and Franke, Uwe and Roth, Stefan and Schiele, Bernt},

booktitle={CVPR Workshop on The Future of Datasets in Vision},

year={2015}

}

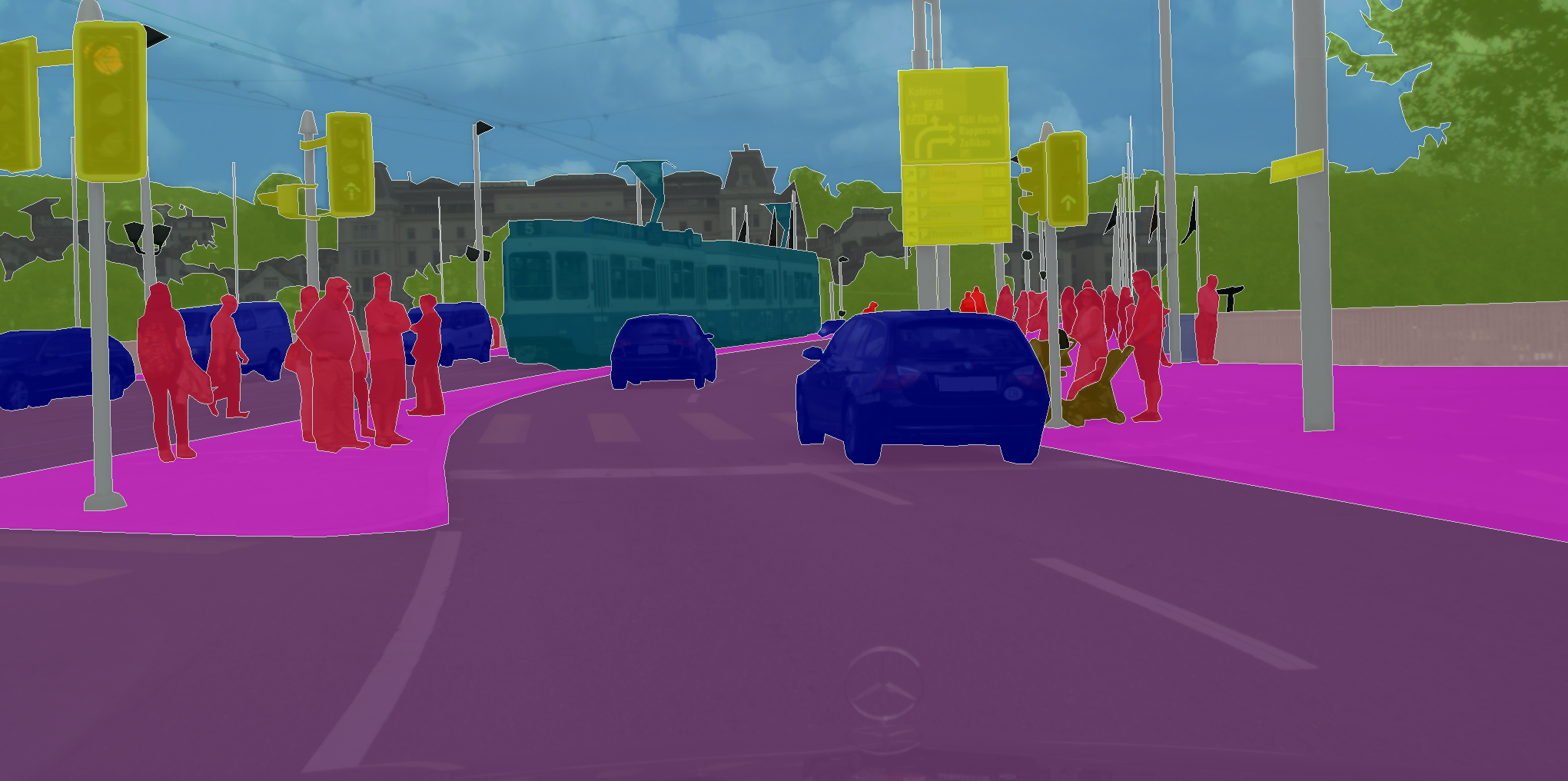

Cityscapes is a new large-scale dataset of diverse stereo video sequences recorded in street scenes from 50 different cities (central Europe), with high-quality semantic labelling annotations of 5 000 frames in addition to a larger set of 20 000 weakly annotated frames.

Visit the project page to download the data and related scripts, and to view the results leaderboard.

Longer version accepted to CVPR 2016 (spotlight); camera-ready version in preparation.

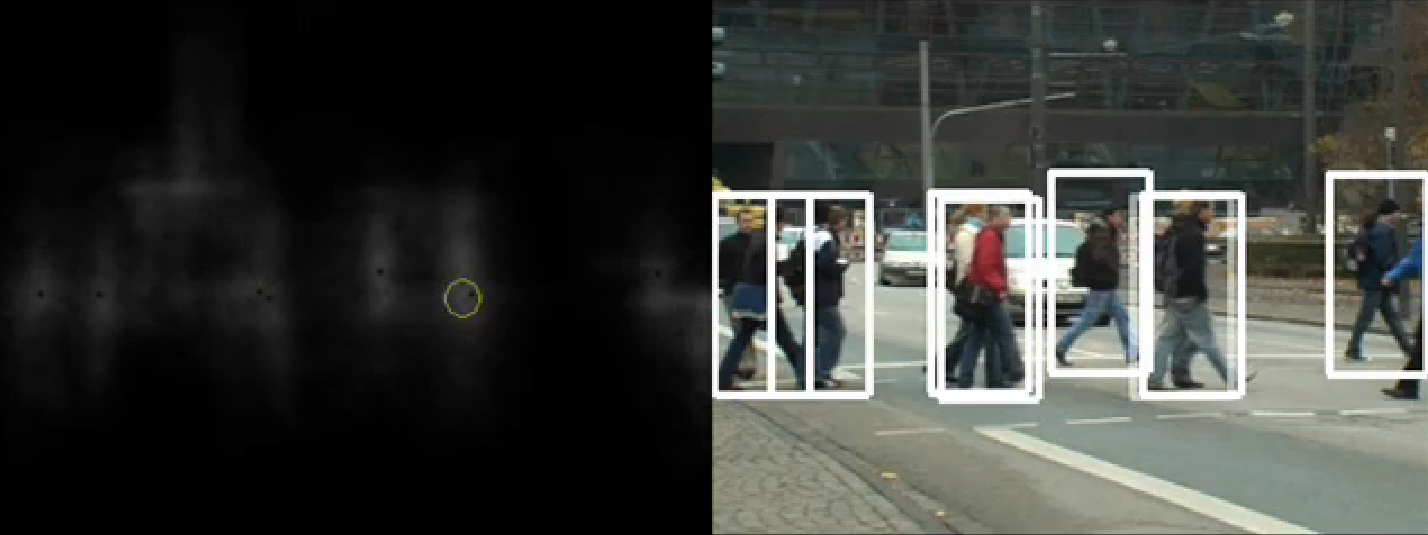

How far are we from solving pedestrian detection?

S. Zhang, R. Benenson, M. Omran, J. Hosang, B. Schiele

CVPR 2016

@inproceedings{Zhang2016Cvpr,

Author = {Shanshan Zhang and Rodrigo Benenson and Mohamed Omran and Jan Hosang and Bernt Schiele},

Title = {How Far are We from Solving Pedestrian Detection?},

Year = {2016},

booktitle = {CVPR}

}

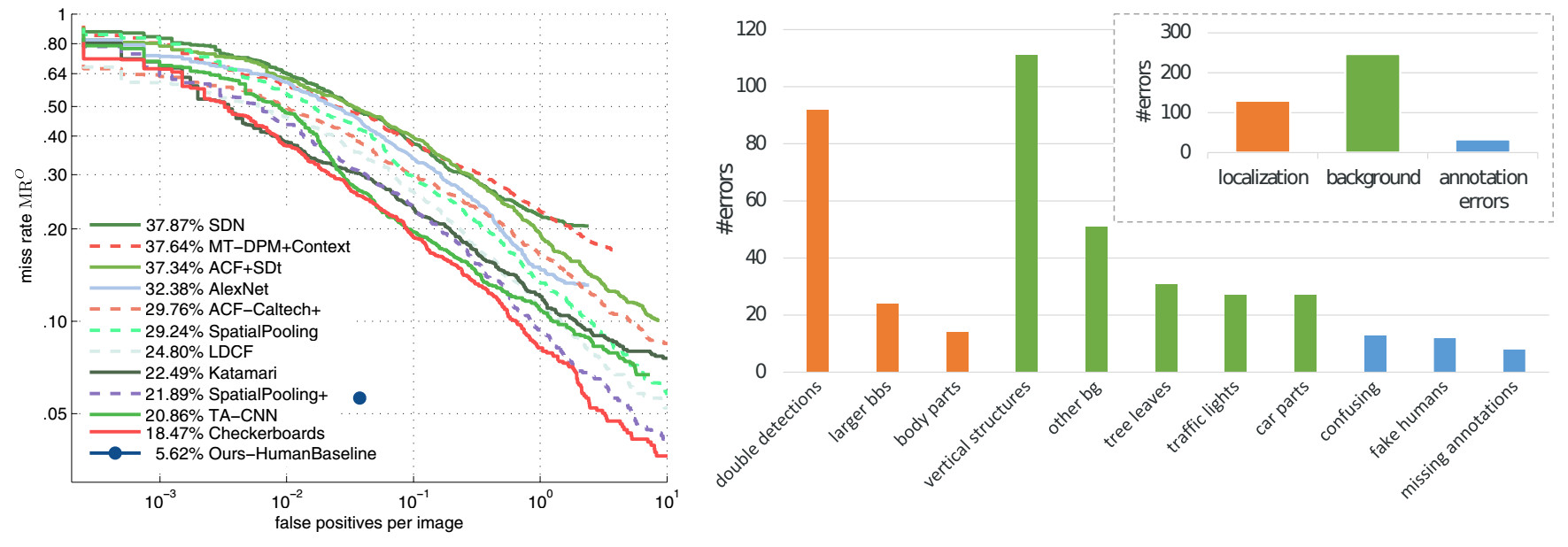

We evaluate a human baseline for Caltech pedestrian detection, analyse the weak areas of current detectors, push detection performance, and provide new, improved annotations for the dataset.

Visit project page to download the improved annotations.

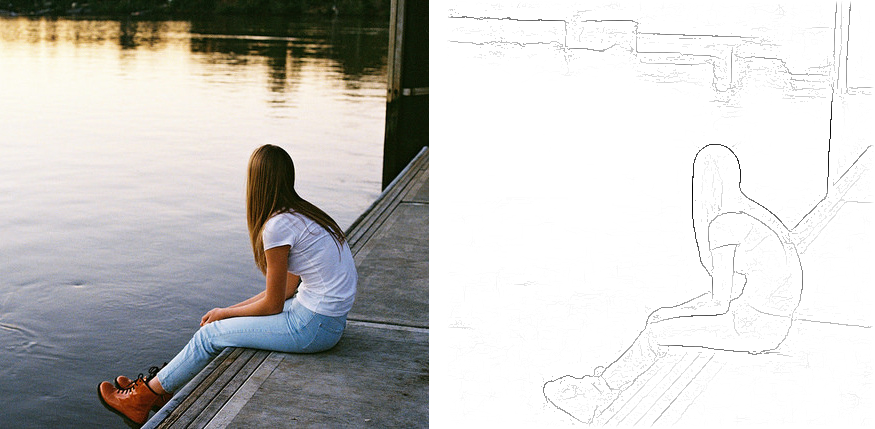

Weakly supervised object boundaries

A. Khoreva, R. Benenson, M. Omran, M. Hein, B. Schiele

CVPR 2016 (spotlight)

@inproceedings{Khoreva2016Cvpr,

title={Weakly Supervised Object Boundaries},

author={Khoreva, Anna and Benenson, Rodrigo and Omran, Mohamed and Hein, Matthias and Schiele, Bernt},

booktitle = {CVPR},

year={2016}

}

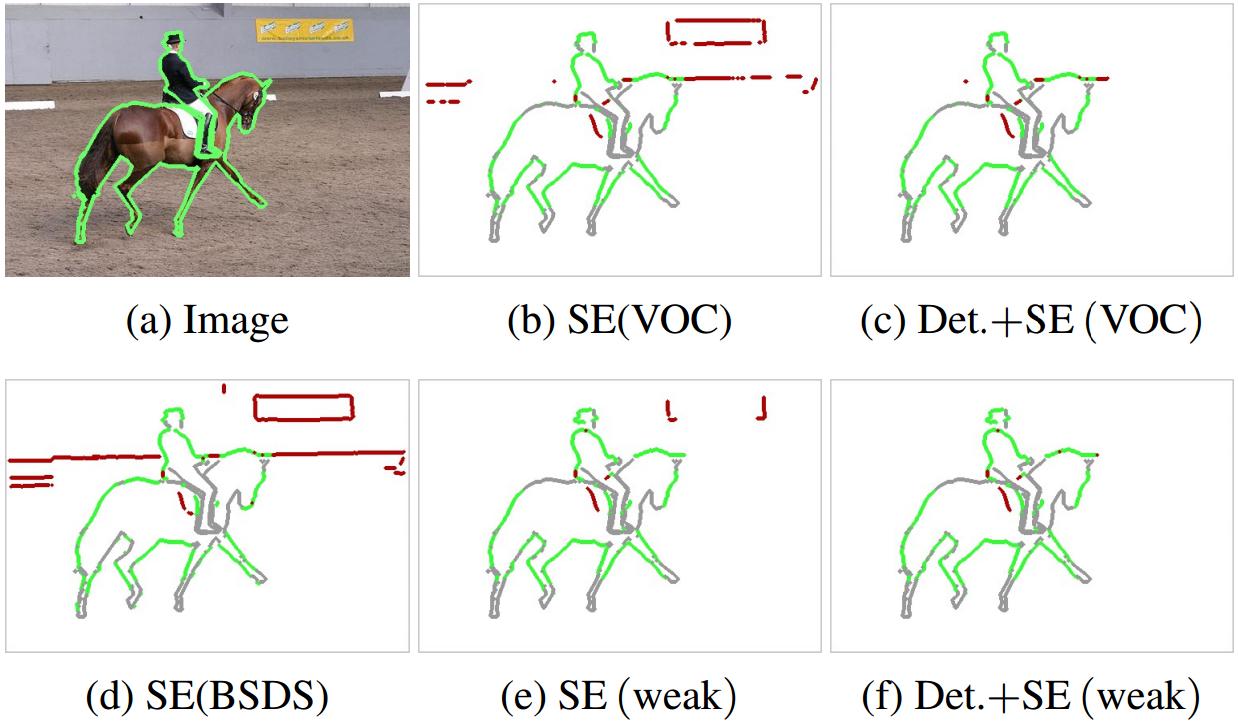

Using detection bounding box annotations, we show that it is possible to train surprisingly high-quality class-specific object boundary detectors.

2015 Listed as CVPR 2015 outstanding reviewer. On average over the year, received more than one citation per day

Person recognition in personal photo collections

S. Oh, R. Benenson, M. Fritz, B. Schiele

ICCV 2015

@INPROCEEDINGS{Oh2015Iccv,

title={Person Recognition in Personal Photo Collections},

author={S. Oh and R. Benenson and M. Fritz and B. Schiele},

booktitle = {ICCV},

year={2015}}

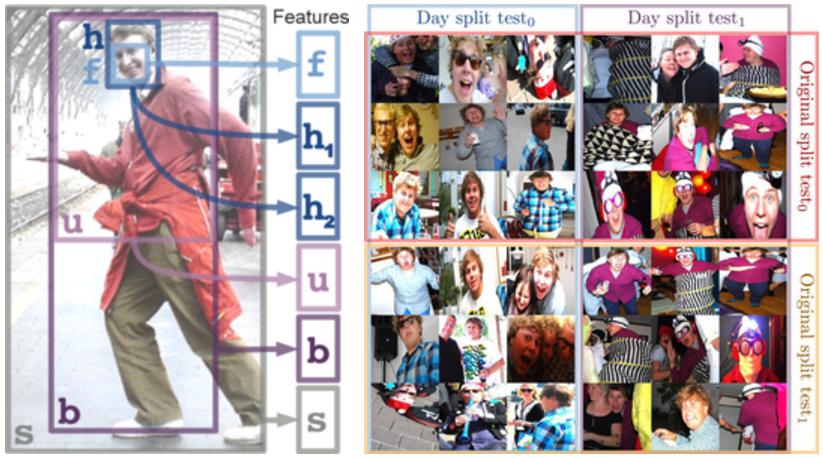

We show that state-of-the-art person recognition in social media photos can be reached using straightforward convolutional network models.

Watch 1 minute summary video. Visit project page for extended annotations, code, and trained models.

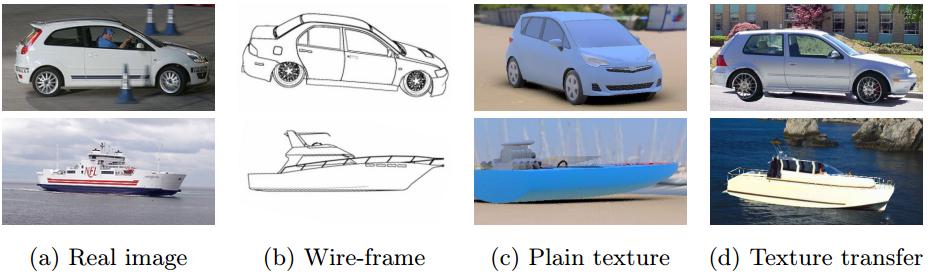

What is holding back convnets for detection?

B. Pepik, R. Benenson, T. Ritschel, B. Schiele

GCPR 2015

@INPROCEEDINGS{Pepik2015Gcpr,

title = {What is Holding Back Convnets for Detection?},

author = {Pepik, Bojan and Benenson, Rodrigo and Ritschel, Tobias and Schiele, Bernt},

booktitle = {German Conference on Pattern Recognition (GCPR)},

year = {2015}

}

We leverage synthetic image renderings and the recent Pascal3D+ annotations to enable a new empirical analysis of the R-CNN detector. We aim at understanding "what can the network learn, and what can it not?", and report the best known results on the Pascal3D+ detection and viewpoint estimation tasks.

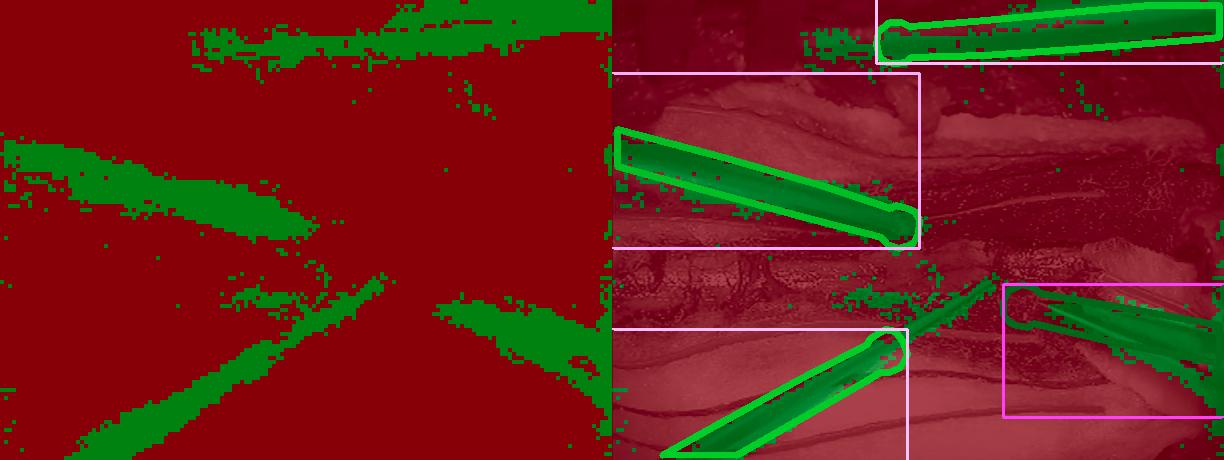

Detecting surgical tools by modelling local appearance and global shape

D. Bouget, R. Benenson, M. Omran, L. Riffaud, B. Schiele, P. Jannin

TMI 2015

@ARTICLE{Bouget2015Tmi,

title = {Detecting Surgical Tools by Modelling Local Appearance and Global Shape},

journal = {IEEE Transactions on Medical Imaging (TMI)},

year = {2015},

author = {David Bouget and Rodrigo Benenson and Mohamed Omran and Laurent Riffaud and Bernt Schiele and Pierre Jannin}

}

We propose a new two-stage pipeline for tool detection and pose estimation in 2D surgical images. Compared to previous work, it removes assumptions regarding the geometry, number, and positions of the tools.

We also release a new surgical tools dataset made of 2476 monocular images captured by neurosurgical microscopes during in-vivo surgeries, with pixel-level annotation of the tools.

(Warning: the paper contains raw surgery images)

Visit project page to access the dataset.

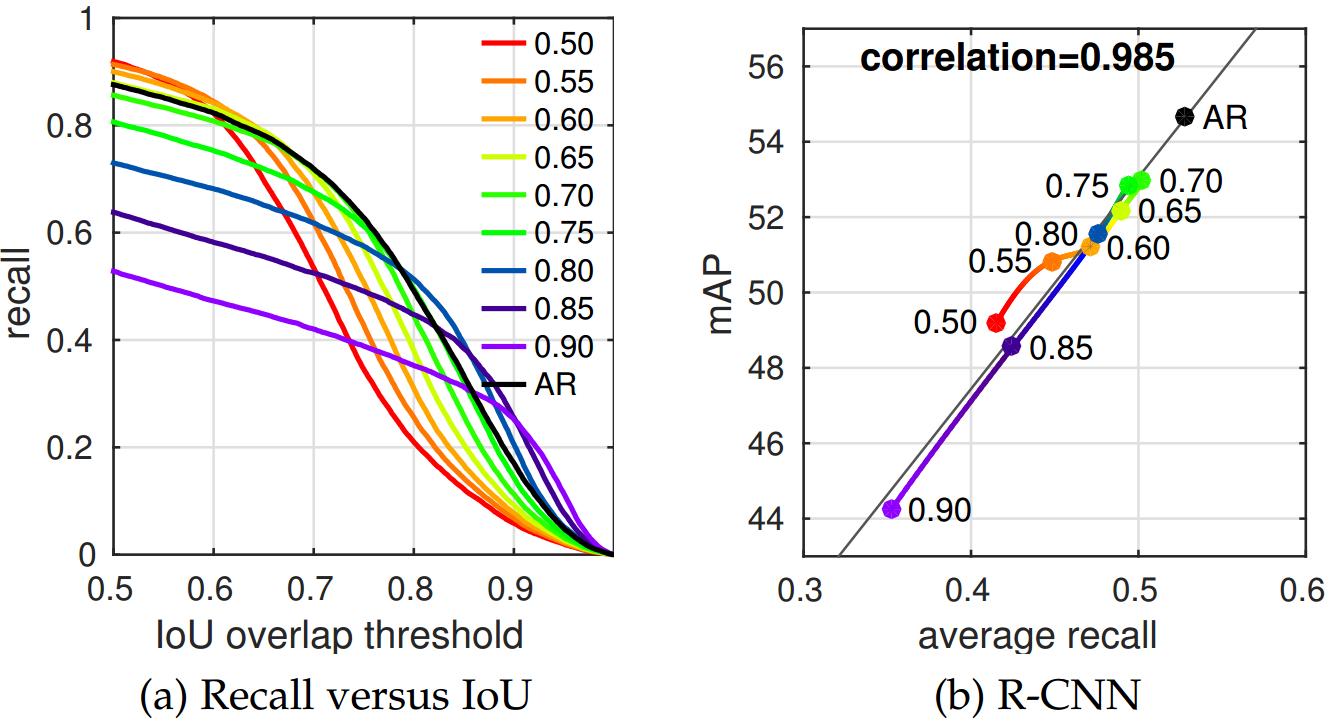

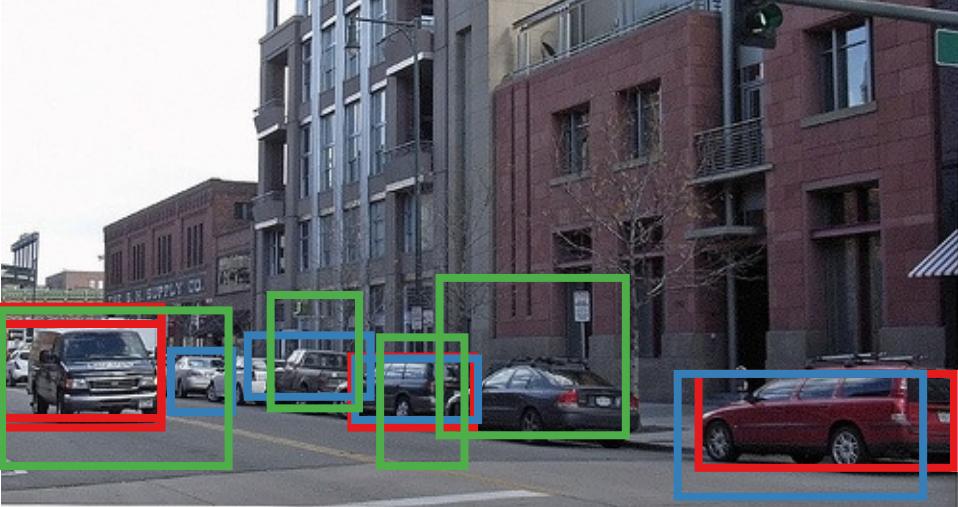

What makes for effective detection proposals?

J. Hosang, R. Benenson, P. Dollár, B. Schiele

PAMI 2015

@ARTICLE{Hosang2015Pami,

author = {J. Hosang and R. Benenson and P. Dollár and B. Schiele},

title = {What makes for effective detection proposals?},

journal = {PAMI},

year = {2015}

}

Detection proposals make it possible to avoid an exhaustive sliding window search across images while keeping high detection quality. We provide an in-depth analysis of proposal methods regarding recall, repeatability, and impact on DPM and R-CNN detector performance. Our findings show common strengths and weaknesses of existing methods, and provide insights and metrics for selecting and tuning proposal methods.

Visit project page for data and code downloads. This is the extended version of our BMVC 2014 paper.
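The recall analysis above rests on matching each ground-truth box to its best-overlapping proposal. Below is a minimal Python sketch of that kind of measure, assuming axis-aligned boxes given as hypothetical `(x1, y1, x2, y2)` tuples: it computes intersection over union (IoU), recall at a fixed IoU threshold, and recall averaged over thresholds between 0.5 and 1 (the uniform grid of 51 thresholds is our assumption). It illustrates the general idea only and is not the paper's evaluation code.

```python
from typing import List, Sequence, Tuple

# Hypothetical box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def best_overlaps(gt: Sequence[Box], proposals: Sequence[Box]) -> List[float]:
    """For each ground-truth box, the highest IoU reached by any proposal."""
    return [max((iou(g, p) for p in proposals), default=0.0) for g in gt]


def recall_at(overlaps: Sequence[float], threshold: float) -> float:
    """Fraction of ground-truth boxes covered by a proposal with IoU >= threshold."""
    if not overlaps:
        return 0.0
    return sum(o >= threshold for o in overlaps) / len(overlaps)


def average_recall(overlaps: Sequence[float], lo: float = 0.5,
                   hi: float = 1.0, steps: int = 51) -> float:
    """Recall averaged over a uniform grid of IoU thresholds in [lo, hi]."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    thresholds = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    return sum(recall_at(overlaps, t) for t in thresholds) / len(thresholds)
```

For example, with ground truth `[(0, 0, 10, 10), (20, 20, 30, 30)]` and proposals `[(0, 0, 10, 10), (22, 20, 32, 30)]`, the best overlaps are 1.0 and 2/3, so recall is 1.0 at IoU 0.5 but drops to 0.5 at IoU 0.7. Averaging over thresholds rewards proposals that localize objects tightly, not just loosely.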

Filtered channel features for pedestrian detection

S. Zhang, R. Benenson, B. Schiele

CVPR 2015

@INPROCEEDINGS{Zhang2015Cvpr,

author = {S. Zhang and R. Benenson and B. Schiele},

title = {Filtered channel features for pedestrian detection},

booktitle = {CVPR},

year = {2015}

}

We propose a unifying framework that covers multiple pedestrian detectors based on decision forests (ICF, ACF, SquaresChnFtrs, LDCF, InformedHaar). Using only HOG+colour features we reach top performance on the Caltech-USA benchmark (surpassing the best reported convolutional network results).

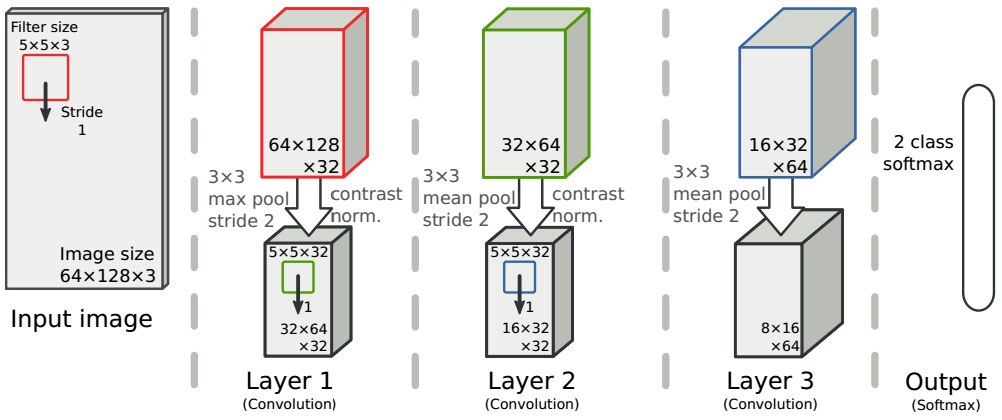

Taking a deeper look at pedestrians

J. Hosang, M. Omran, R. Benenson, B. Schiele

CVPR 2015

@INPROCEEDINGS{Hosang2015Cvpr,

author = {J. Hosang and M. Omran and R. Benenson and B. Schiele},

title = {Taking a deeper look at pedestrians},

booktitle = {CVPR},

year = {2015}

}

We show that convolutional networks can reach better results for pedestrian detection than previously reported. We provide new best reported results (on Caltech-USA) under different training regimes (1x, 10x, and ImageNet pre-training).

Visit project page to download pre-computed results and trained models.

2014 First time at BMVC

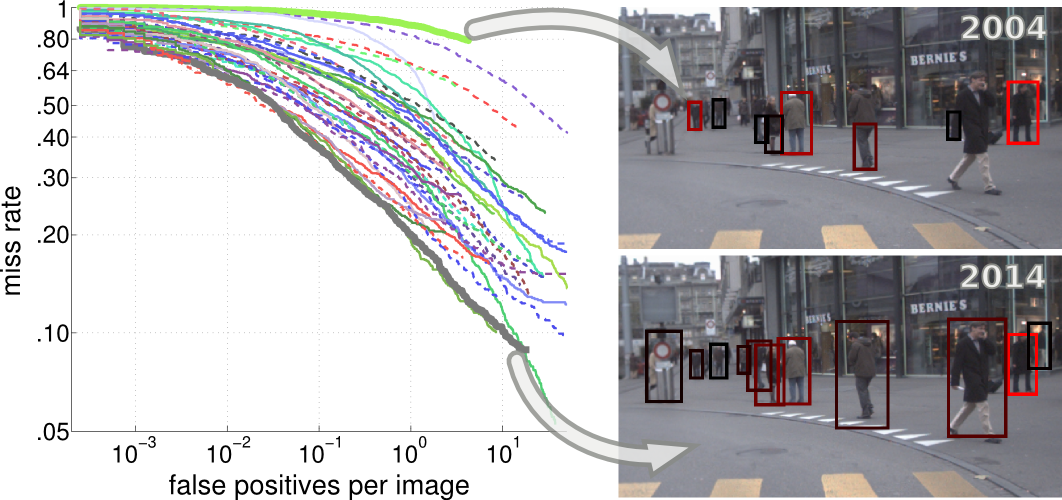

Ten years of pedestrian detection, what have we learned?

R. Benenson, M. Omran, J. Hosang, B. Schiele

ECCV 2014, CVRSUAD workshop

@INPROCEEDINGS{Benenson2014Eccvw,

author = {R. Benenson and M. Omran and J. Hosang and B. Schiele},

title = {Ten years of pedestrian detection, what have we learned?},

booktitle = {ECCV, CVRSUAD workshop},

year = {2014}

}

We review and discuss the 40+ detectors currently present in the Caltech pedestrian detection benchmark. We identify the strategies that have paid off, and the ones that have yet to show their promise.

By combining existing approaches we study their complementarity and report the current best known performance on the challenging Caltech-USA dataset.
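Caltech-USA results are conventionally summarized by the log-average miss rate: the miss rate sampled at nine false-positives-per-image (FPPI) values evenly spaced in log space between 10^-2 and 10^0, then averaged in log space (a geometric mean). The Python sketch below is a simplified illustration under stated assumptions: the input is a hypothetical miss-rate vs FPPI curve given as two lists sorted by increasing FPPI, and each reference FPPI takes the miss rate of the last curve point at or below it (1.0 if there is none). The official Caltech evaluation toolbox may differ in such sampling details.

```python
import math
from typing import Sequence


def log_average_miss_rate(fppi: Sequence[float], miss_rate: Sequence[float],
                          lo: float = 1e-2, hi: float = 1.0, n: int = 9) -> float:
    """Geometric mean of the miss rate sampled at n FPPI values log-spaced in [lo, hi].

    Assumes fppi is sorted in increasing order and miss_rate[i] is the
    miss rate of the detector when operating at fppi[i].
    """
    if len(fppi) != len(miss_rate):
        raise ValueError("fppi and miss_rate must have the same length")
    log_lo, log_hi = math.log10(lo), math.log10(hi)
    refs = [10 ** (log_lo + (log_hi - log_lo) * i / (n - 1)) for i in range(n)]
    samples = []
    for r in refs:
        # Assumption: use the curve point with the largest FPPI not exceeding r;
        # if the curve never reaches that low an FPPI, count a full miss (1.0).
        below = [m for f, m in zip(fppi, miss_rate) if f <= r]
        samples.append(below[-1] if below else 1.0)
    # Average in log space; clamp to avoid log(0) for a perfect detector.
    return math.exp(sum(math.log(max(m, 1e-10)) for m in samples) / n)
```

Averaging in log space keeps the low-FPPI end of the curve, which matters for practical operating points, from being swamped by the high-FPPI end.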

How good are detection proposals, really?

J. Hosang, R. Benenson, B. Schiele

Oral at BMVC 2014, 7% acceptance rate

@INPROCEEDINGS{Hosang2014Bmvc,

author = {J. Hosang and R. Benenson and B. Schiele},

title = {How good are detection proposals, really?},

booktitle = {BMVC},

year = {2014}

}

Detection proposals make it possible to avoid an exhaustive sliding window search across images while keeping high detection quality. We provide an in-depth analysis of proposal methods regarding recall, repeatability, and impact on DPM detector performance.

Our findings show common weaknesses of existing methods, and provide insights to choose the most adequate method for different settings.

Visit project page for more information.

See also the updated and extended PAMI journal version, available at arXiv.org.

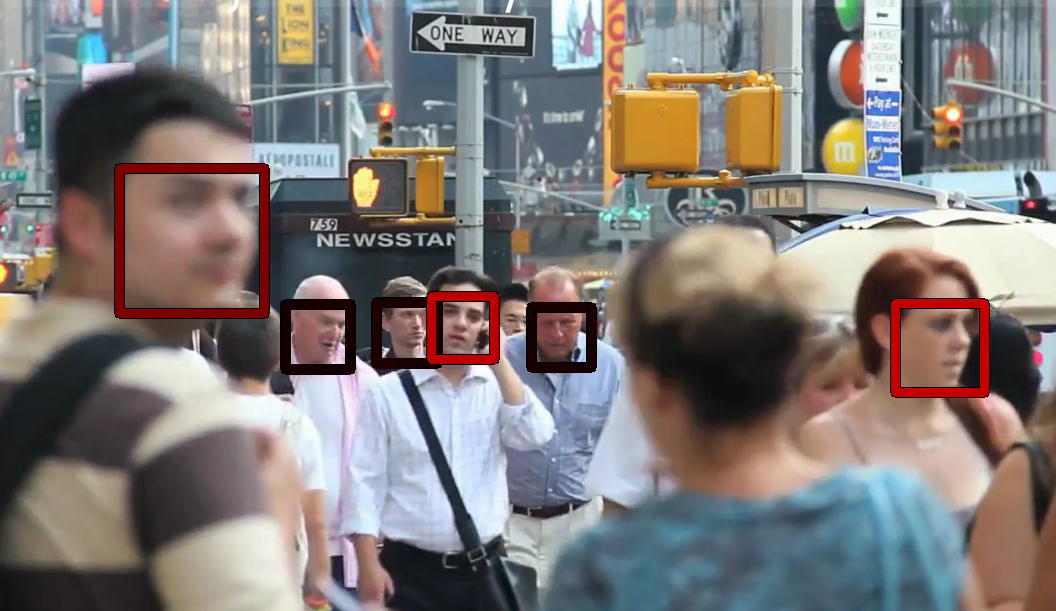

Face detection without bells and whistles

M. Mathias, R. Benenson, M. Pedersoli, L. Van Gool

Oral at ECCV 2014, 2.8% acceptance rate

Chef's recommendation

@INPROCEEDINGS{Mathias2014Eccv,

author = {M. Mathias and R. Benenson and M. Pedersoli and L. {Van Gool}},

title = {Face detection without bells and whistles},

booktitle = {ECCV},

year = {2014}

}

We present two surprising new face detection results: one using a vanilla deformable part model, and the other using only rigid templates (similar to the Viola-Jones detector).

Both of these reach top performance, improving over commercial and research systems.

We also discuss issues with existing evaluation benchmarks and propose an improved evaluation procedure.

Watch example result video A, or result video B.

Download presentation slides. Watch ECCV talk.

Visit project page for more information (evaluation code and trained models).

2013 Listed as CVPR 2013 outstanding reviewer

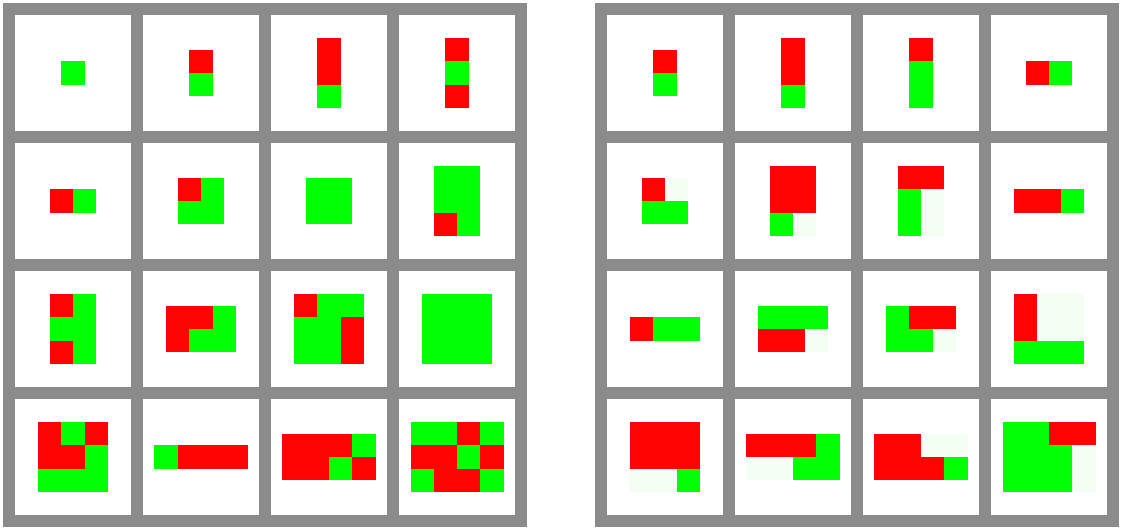

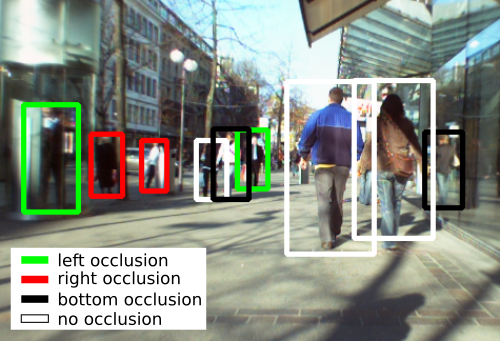

Handling occlusions with franken-classifiers

M. Mathias, R. Benenson, R. Timofte, L. Van Gool

ICCV 2013

@INPROCEEDINGS{Mathias2013Iccv,

author = {M. Mathias and R. Benenson and R. Timofte and L. {Van Gool}},

title = {Handling Occlusions with Franken-classifiers},

booktitle = {ICCV},

year = {2013}

}

Detecting partially occluded pedestrians is challenging.

Here we study why our classifier performs poorly under occlusion, and we show that using a set of occlusion-specific classifiers can significantly improve detection quality. Our proposed franken-classifiers obtain sub-linear growth in training and testing time while keeping high detection quality.

The presented poster.

Seeking the strongest rigid detector

R. Benenson*, M. Mathias*, T. Tuytelaars, L. Van Gool

(* indicates equal contribution.)

CVPR 2013

Chef's recommendation

@INPROCEEDINGS{Benenson2013Cvpr,

author = {R. Benenson and M. Mathias and T. Tuytelaars and L. {Van Gool}},

title = {Seeking the strongest rigid detector},

booktitle = {CVPR},

year = {2013}

}

Previous work to boost detection quality explored using non-linear SVMs, more sophisticated features, geometric priors, motion information, deformable models, or deeper architectures. We use none of these.

Using a single rigid classifier per candidate detection window, we reach or improve state-of-the-art performance for pedestrian detection on the INRIA, ETH and Caltech-USA datasets.

Watch result video, and the presented poster.

Traffic Sign Recognition - How far are we from the solution?

M. Mathias*, R. Timofte*, R. Benenson, L. Van Gool

(* indicates equal contribution.)

IJCNN 2013

@INPROCEEDINGS{Mathias2013Ijcnn,

author = {M. Mathias and R. Timofte and R. Benenson and L. {Van Gool}},

title = {Traffic Sign Recognition - How far are we from the solution?},

booktitle = {IJCNN},

year = {2013}

}

We show that, without any application-specific modification, existing methods for pedestrian detection and face recognition can reach performance in the range of 95% to 99% of the perfect solution on current traffic sign datasets.

Watch result video.

This work was one of the winners of the German Traffic Sign Detection Benchmark; see details in the related publication.

2012 First time at CVPR and ECCV. Obtained the CVVT workshop best paper award

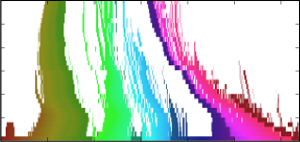

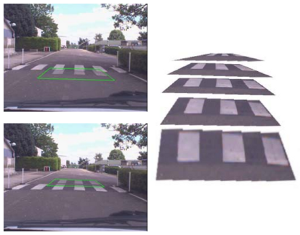

Fast stixels estimation for fast pedestrian detection

R. Benenson, M. Mathias, R. Timofte, L. Van Gool

ECCV 2012, CVVT workshop

Selected best paper of the workshop.

@INPROCEEDINGS{Benenson2012EccvCvvtWorkshop,

author = {R. Benenson and M. Mathias and R. Timofte and L. {Van Gool}},

title = {Fast stixels estimation for fast pedestrian detection},

booktitle = {ECCV, CVVT workshop},

year = {2012}

}

We revisit the stixel computation method.

Stixel computation now reaches 200 Hz (300 Hz in the latest version), and we can detect pedestrians at a record speed of 165 Hz (with state-of-the-art quality).

Watch result video, and the presentation slides.

Source code available, with a research only license.

Stixels motion estimation without optical flow computation

B. Gunyel, R. Benenson, R. Timofte, L. Van Gool

ECCV 2012

@INPROCEEDINGS{Gunyel2012Eccv,

author = {B. Gunyel and R. Benenson and R. Timofte and L. {Van Gool}},

title = {Stixels motion estimation without optical flow computation},

booktitle = {ECCV},

year = {2012}

}

We estimate the motion of objects without computing optical flow.

Watch a short result video, and the presented poster.

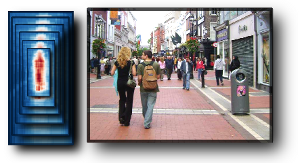

Pedestrian detection at 100 frames per second

R. Benenson, M. Mathias, R. Timofte, L. Van Gool

Oral at CVPR 2012, 2.5% acceptance rate

Chef's recommendation

@INPROCEEDINGS{Benenson2012Cvpr,

author = {R. Benenson and M. Mathias and R. Timofte and L. {Van Gool}},

title = {Pedestrian detection at 100 frames per second},

booktitle = {CVPR},

year = {2012}

}

We propose a new detector that improves both speed and quality over state-of-the-art single-part detectors. We reach 50 Hz in a monocular setup, and 135 Hz when using stixels on a street scene (including the stereo processing time).

Download presentation slides. Watch CVPR talk.

Watch CVPR result video.

Watch the Europa project result video, showing our detector running inside a robot together with a tracker from RWTH Aachen.

Source code available, with a research only license.

2011 First time at ICCV

Stixels estimation without depth map computation

R. Benenson, R. Timofte, L. Van Gool

ICCV 2011, CVVT workshop

@INPROCEEDINGS{Benenson2011IccvWorkshop,

author = {R. Benenson and R. Timofte and L. {Van Gool}},

title = {Stixels estimation without depthmap computation},

booktitle = {ICCV, CVVT workshop},

year = {2011}

}

We detect (unknown) objects on a street scene faster than it takes to compute a depth map.

Source code available, with a research only license.

2010 First year at Visics, scratching my head

2009 Working on industrial applications

2008 Got my PhD!

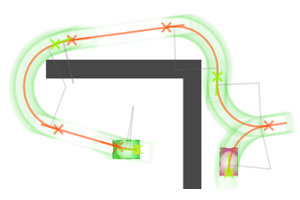

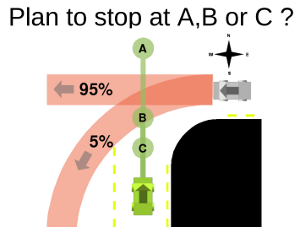

Achievable safety of driverless ground vehicles

R. Benenson, T. Fraichard, M. Parent

ICARCV 2008

Chef's recommendation

@INPROCEEDINGS{Benenson2008Icarcv,

author = {R. Benenson and T. Fraichard and M. Parent},

title = {Achievable safety of driverless vehicles},

booktitle = {ICARCV},

year = {2008},

url = {http://hal.inria.fr/inria-00294750}

}

Which safety guarantees can we give for autonomous vehicles?

Under which conditions do these guarantees hold?

Which guarantees can we give during extraordinary situations?

Perception for driverless vehicles

R. Benenson

PhD thesis, Mines Paristech 2008

@PHDTHESIS{Benenson2008PhD,

author = {Rodrigo Benenson},

title = {Perception for driverless vehicles in urban environment},

school = {Ecole des Mines de Paris},

year = {2008},

url = {http://pastel.paristech.org/5327}

}

How to design a driverless vehicle?

Core contributions in safety design, perception algorithms, and a proof-of-concept system integration.

Design of an urban driverless ground vehicle

R. Benenson, M. Parent

IROS 2008

Chef's recommendation

@INPROCEEDINGS{Benenson2008Iros,

author = {R. Benenson and M. Parent},

title = {Design of a driverless vehicle for urban environment},

booktitle = {IROS},

year = {2008},

url = {http://hal.inria.fr/inria-00295152}

}

Updated version of our IROS 2006 work.

Introduction of the harm function, integration with a global planning system, and (primitive) use of vision for traversability estimation.

Towards urban driverless vehicles

R. Benenson, S. Petti, T. Fraichard, M. Parent

International Journal of Vehicle Autonomous Systems 2008

@ARTICLE{Benenson2008Ijvas,

author = {Rodrigo Benenson and St{\'e}phane Petti and Thierry Fraichard and Michel Parent},

title = {Toward urban driverless vehicles},

journal = {Int. Journal of Vehicle Autonomous Systems, Special Issue on Advances in Autonomous Vehicle Technologies for Urban Environment},

year = {2008},

volume = {1},

pages = {4--23},

number = {6},

url = {http://hal.inria.fr/inria-00115112}

}

Journal version of our IROS 2006 work.

2007 First time at ICRA

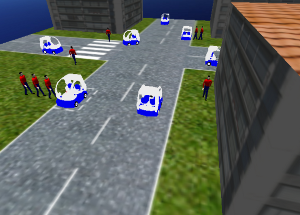

Cybernetic transportation systems design and development: simulation software

S. Boissé, R. Benenson, L. Bouraoui, M. Parent, L. Vlacic

ICRA 2007

This paper describes the simulator we developed to validate our on-board software in single- and multiple-vehicle scenarios.

Networking needs and solutions for road vehicles at IMARA

O. Mehani, R. Benenson, S. Lemaignan, T. Ernst

ITST 2007

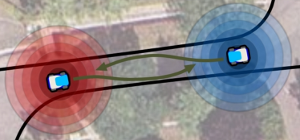

What are the communication needs of driverless vehicles?

2006 First time at IROS

Integrating perception and planning for autonomous navigation of urban vehicles

R. Benenson, S. Petti, M. Parent, T. Fraichard

IROS 2006

@INPROCEEDINGS{Benenson2006Iros,

author = {Rodrigo Benenson and St{\'e}phane Petti and Michel Parent and Thierry Fraichard},

title = {Integrating Perception and Planning for Autonomous Navigation of Urban Vehicles},

booktitle = {IROS},

year = {2006},

url = {http://hal.inria.fr/inria-00086286}

}

Motion estimation, online static map building, moving object detection and tracking, safe planning, and control: all of it running in real time on a single PC, controlling an electric vehicle.

See the proof of concept video.

Real-time vehicle motion estimation using texture learning and monocular vision

Y. Dumortier, R. Benenson, M. Kais

ICCVG 2006

How we estimate vehicle motion using the ground texture.

2005 First year of PhD

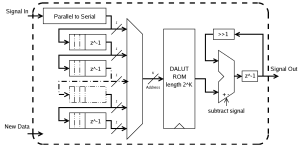

Diseño e implementación de un receptor digital de radio (Design and implementation of a digital radio receiver)

R. Benenson

Engineering thesis (in Spanish), UTFSM 2004

Copyright Notice

The downloadable documents on this web site are presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. These works may not be reposted without the explicit permission of the copyright holder.

Code free the sources!

Due to industrial partnerships, part of the code I write is closed source.

Happily, I was authorized to release the source code of my work on fast pedestrian detection and face detection.

Other than writing my own original research code, I have also ported a couple of algorithms developed by other authors:

- Python port of the circulant matrix tracker by detection, by João F. Henriques.

- Linux port of Pedestrian detection using Hough forest, by Olga Barinova.

- Javascript port of boundaries estimation using structured forests, by Piotr Dollár.

Otherwise, here is a list of interesting open-source code I have used:

- cudatemplates, which makes my life with CUDA (GPU) code much easier.

- ROS, a good-enough tool to create modular robotic software.

- I believe research must use open source tools. My current combo is C++ + QtCreator + numpy + scipy + matplotlib + spyder.

Data free the bits!

Are we there yet?

Did you ever want to quickly learn which paper provides the best results on standard dataset X?

I have created a mini-site to aggregate in one place results on key computer vision datasets.

The result set is crowdsourced. You are welcome to provide inputs and comments.

Below, some of the datasets I have used during my research:

- INRIA persons, the standard dataset for pedestrians.

- Caltech pedestrians, a (much) larger dataset of pedestrians.

- ETHZ pedestrians, one of the rare color stereo sequences with annotated pedestrians.

Contact who is rodrigob?

My name is Rodrigo Benenson.

I studied electronics engineering at UTFSM.

I did my PhD in robotics inside the Lara team, part of Mines Paristech and INRIA.

I then moved for a post-doc in computer vision at Visics, KU Leuven.

I currently continue my research at the Max-Planck-Institut für Informatik, in Saarbrücken, Germany.

You can contact me by sending an email to rodrigoDOTbenensonATgmailDOTcom.

I want to build a better world.

Page created using Bootstrap.